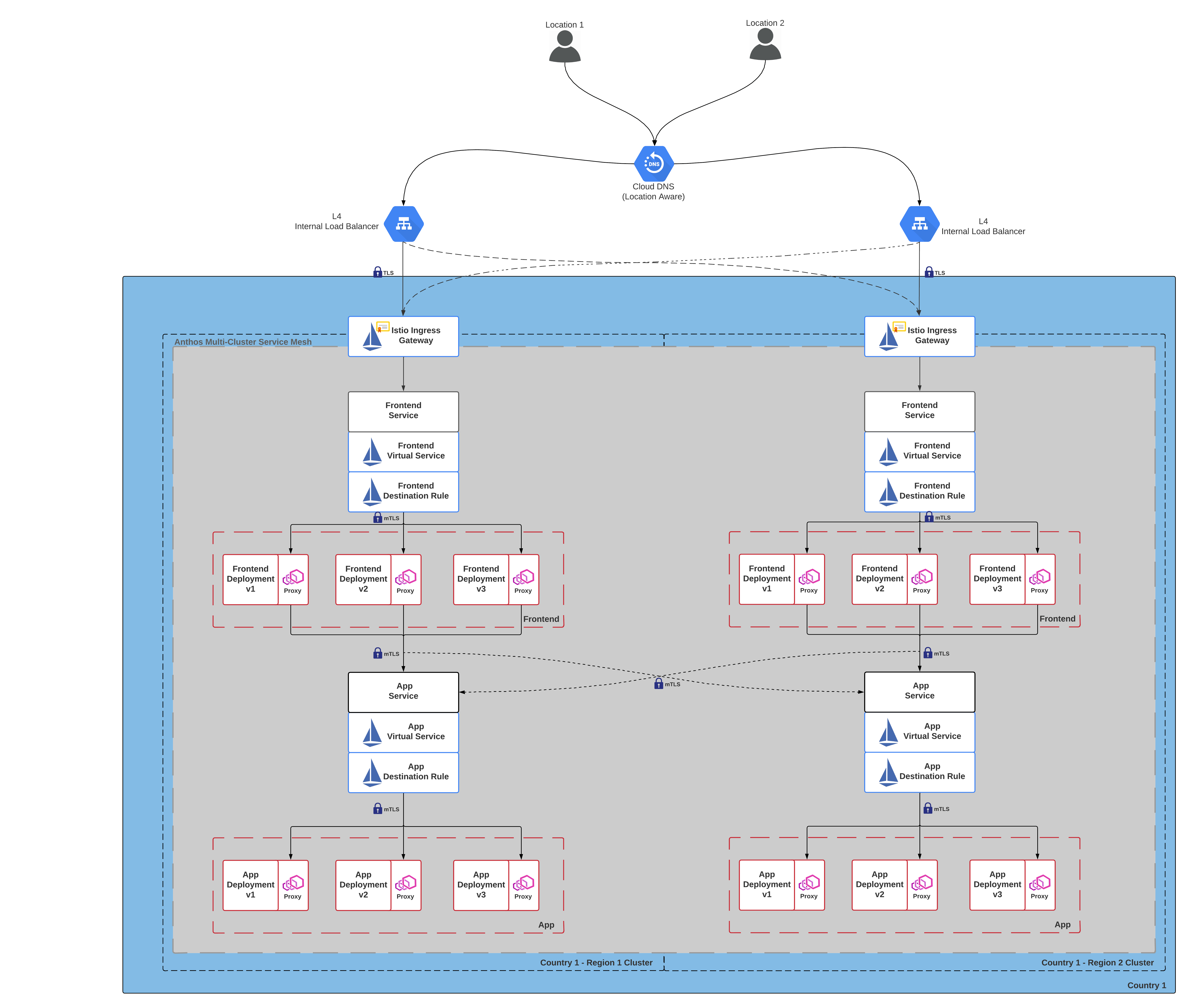

What You Will Create

The guide will set up the following:

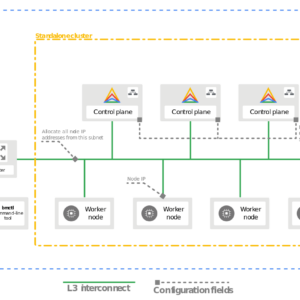

- Two private GKE Autopilot clusters with master global access

- ASM with a multi-cluster mesh

- An Istio ingress gateway behind an L4 internal load balancer

- A gateway certificate issued by cert-manager and google-cas-issuer, requested from a Google private CA (Certificate Authority Service)

- A whereami deployment

- Custom routes for control-plane access via VPN (optional)

Pre-requisites

Google APIs

gcloud services enable \
  --project=${PROJECT_ID} \
  anthos.googleapis.com \
  container.googleapis.com \
  compute.googleapis.com \
  monitoring.googleapis.com \
  logging.googleapis.com \
  cloudtrace.googleapis.com \
  meshca.googleapis.com \
  meshtelemetry.googleapis.com \
  meshconfig.googleapis.com \
  iamcredentials.googleapis.com \
  gkeconnect.googleapis.com \
  gkehub.googleapis.com \
  multiclusteringress.googleapis.com \
  multiclusterservicediscovery.googleapis.com \
  stackdriver.googleapis.com \
  trafficdirector.googleapis.com \
  cloudresourcemanager.googleapis.com \
  privateca.googleapis.com

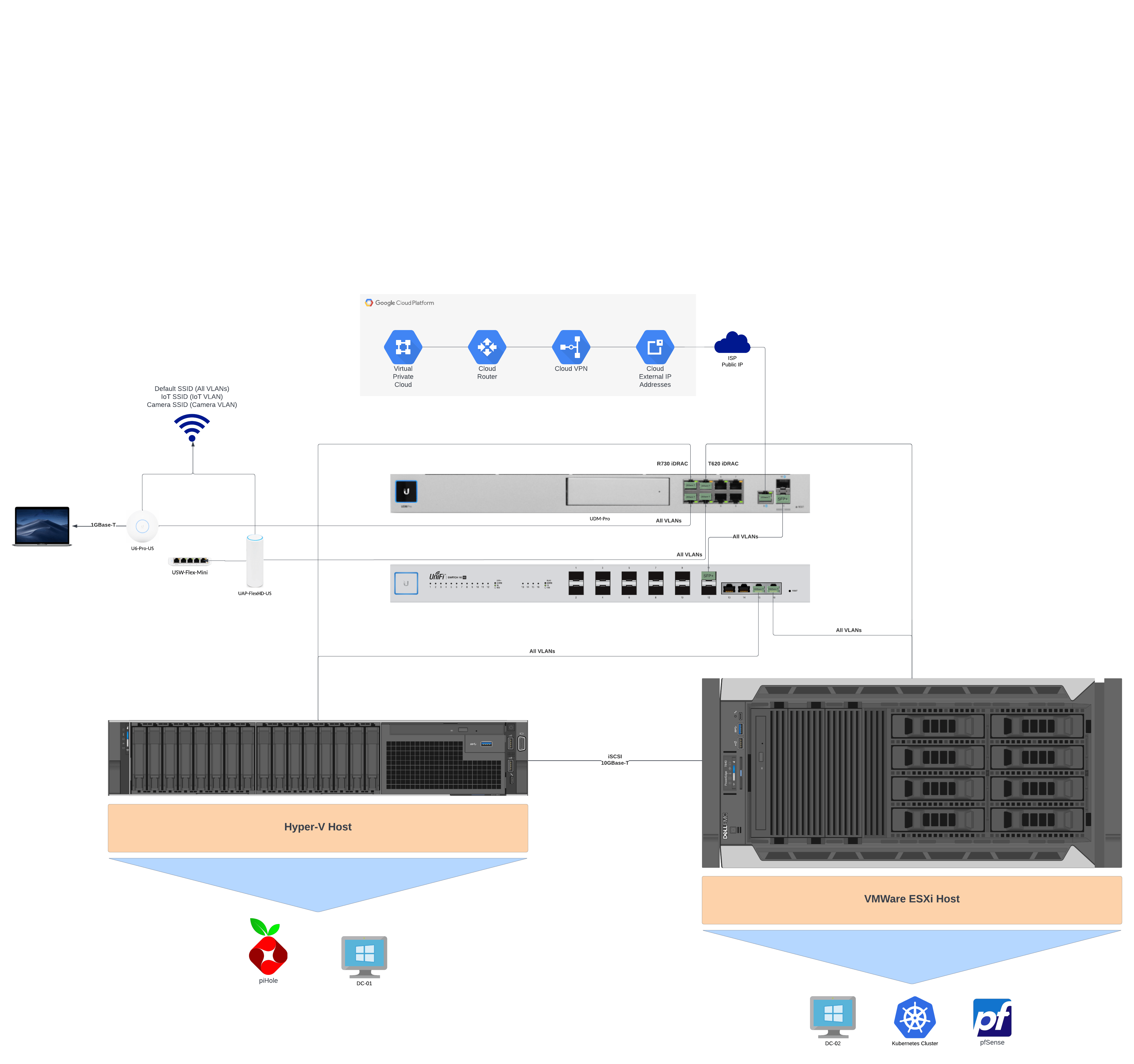

VPC Network

You should have a VPC with global dynamic routing enabled and a subnet in each cluster's region. Each subnet needs secondary ranges named `pods` and `services`, because the cluster creation commands below reference those names.

If you use a VPN to manage the clusters and want on-premises hosts to resolve your internal Cloud DNS host names, create a DNS server policy with inbound query forwarding enabled.

Cluster Setup

This guide relies primarily on shell scripts, so that each step is visible and the manual configuration process is easy to follow.

Environment Variables

The remaining scripts reference the environment variables defined in this section.

#!/bin/bash
# Enter your project ID below
export PROJECT_ID=jc-project
# Copy and paste the rest below
gcloud config set project ${PROJECT_ID}
export PROJECT_NUM=$(gcloud projects describe ${PROJECT_ID} --format='value(projectNumber)')
export CLUSTER_NETWORK=jc-vpc
export CLUSTER_1=jc-us-west2
export CLUSTER_1_REGION=us-west2
export CLUSTER_1_SUBNET=jc-us-west2
export CLUSTER_1_CIDR=10.222.0.0/20
export CLUSTER_1_PODCIDR=10.4.0.0/14
export CLUSTER_1_SERVICESCIDR=10.49.0.0/16
export CLUSTER_2=jc-us-central1
export CLUSTER_2_REGION=us-central1
export CLUSTER_2_SUBNET=jc-us-central1
export CLUSTER_2_PODCIDR=10.0.0.0/14
export CLUSTER_2_SERVICESCIDR=10.48.0.0/16
export WORKLOAD_POOL=${PROJECT_ID}.svc.id.goog
export MESH_ID="proj-${PROJECT_NUM}"
export ASM_VERSION=1.13
export ASM_LABEL=asm-managed-rapid
export ASM_RELEASE_CHANNEL=rapid
export ASM_PACKAGE=istio-1.13.2-asm.2
export ROOT_CA_POOL=jc-root-pool
export CA_LOCATION=us-central1
export ROOT_CA=jc-root
export SUB_CA_POOL_GW=jc-sub-ca-pool-gw
export SUB_CA_POOL=jc-sub-ca-pool
export SUB_CA_POOL_SC=jc-sub-ca-pool-sc
export SUB_CA_SC=jc-sub-ca-sc
echo Setting WORKDIR ENV to pwd
export WORKDIR=`pwd`
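Before creating the clusters, it can help to confirm that the two clusters' Pod and Service ranges do not overlap, since a multi-cluster mesh on a single VPC network generally needs every Pod IP to be unique and routable. The sketch below is a pure-Bash check under that assumption; `ipv4_to_int`, `cidr_bounds`, `cidrs_overlap` and `check_pair` are hypothetical helper names, not part of any tool used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: detect overlapping IPv4 CIDR ranges in pure Bash.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ipv4_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "start end" (as integers) for a CIDR such as 10.4.0.0/14.
cidr_bounds() {
  local ip="${1%/*}" prefix="${1#*/}"
  local size=$(( 1 << (32 - prefix) ))
  local start=$(( $(ipv4_to_int "$ip") & ~(size - 1) & 0xFFFFFFFF ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_bounds "$1")"
  read -r s2 e2 <<< "$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

check_pair() {
  if cidrs_overlap "$1" "$2"; then echo "OVERLAP: $1 $2"; else echo "ok: $1 $2"; fi
}

# Falls back to the values from the environment block when the variables are unset.
check_pair "${CLUSTER_1_PODCIDR:-10.4.0.0/14}" "${CLUSTER_2_PODCIDR:-10.0.0.0/14}"
check_pair "${CLUSTER_1_SERVICESCIDR:-10.49.0.0/16}" "${CLUSTER_2_SERVICESCIDR:-10.48.0.0/16}"
```

With the values used in this guide, both pairs are adjacent but disjoint, so both lines report `ok`.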

Tools

Most of the shell scripts use the kubectx plugin (`kubectl ctx`), installed through Krew, to switch between clusters.

echo Installing Krew, CTX and NS
(
  set -x; cd "$(mktemp -d)" &&
  OS="$(uname | tr '[:upper:]' '[:lower:]')" &&
  ARCH="$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/')" &&
  curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/download/v0.4.1/krew.tar.gz" &&
  tar zxvf krew.tar.gz &&
  KREW=./krew-"${OS}_${ARCH}" &&
  "$KREW" install krew
)
# Single quotes keep ${PATH} unexpanded, so .bashrc resolves it each time it is sourced
echo 'export PATH="${PATH}:${HOME}/.krew/bin"' >> ~/.bashrc &&
source ~/.bashrc
kubectl krew install ctx ns

Cluster Creation and Configuration

Cluster Creation

gcloud container clusters create-auto ${CLUSTER_1} \
  --project ${PROJECT_ID} \
  --region=${CLUSTER_1_REGION} \
  --enable-master-authorized-networks \
  --enable-private-endpoint \
  --enable-private-nodes \
  --network=${CLUSTER_NETWORK} \
  --subnetwork=${CLUSTER_1_SUBNET} \
  --cluster-secondary-range-name=pods \
  --services-secondary-range-name=services \
  --master-ipv4-cidr=172.31.0.0/28 \
  --master-authorized-networks 192.168.0.0/23 --async

gcloud container clusters create-auto ${CLUSTER_2} \
  --project ${PROJECT_ID} \
  --region=${CLUSTER_2_REGION} \
  --enable-master-authorized-networks \
  --enable-private-endpoint \
  --enable-private-nodes \
  --network=${CLUSTER_NETWORK} \
  --subnetwork=${CLUSTER_2_SUBNET} \
  --cluster-secondary-range-name=pods \
  --services-secondary-range-name=services \
  --master-ipv4-cidr=172.31.0.16/28 \
  --master-authorized-networks 192.168.0.0/23

The master authorized network (192.168.0.0/23) covers my workstation's IP address over the VPN. This entry is optional; if you manage the clusters from a bastion host instead, add either the bastion's /32 IP address or the entire subnet range the bastion is on.

VPN Route Exports (optional)

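For the cluster control-plane endpoint to be advertised through BGP in a VPN scenario, the VPC peerings that GKE creates for the control plane must be updated to export and import custom routes.

# gcloud joins list values with ';', so split them onto separate lines
export PEER=$(gcloud compute networks peerings list --network=${CLUSTER_NETWORK} --format="value(peerings.name)" | tr ';' '\n')
# ${PEER} is intentionally unquoted so Bash splits it into one peering name per iteration
# Note: this updates every peering on the network, not only the GKE ones
for p in ${PEER}
do
  gcloud compute networks peerings update $p --network=${CLUSTER_NETWORK} --export-custom-routes --import-custom-routes
done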
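The peering names arrive as a single semicolon-joined string because gcloud's `value()` format joins list fields with `;`. As a pure-Bash alternative to piping through `tr`, the string can be split with `read -a`; `split_semicolons` is a hypothetical helper, and the peering names in the example are made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a semicolon-joined gcloud value() field
# into one item per line, skipping empty entries.
split_semicolons() {
  local -a items
  local item
  IFS=';' read -r -a items <<< "$1"
  for item in "${items[@]}"; do
    [[ -n "$item" ]] && printf '%s\n' "$item"
  done
  return 0
}

# Example with made-up peering names; each line would be one
# `gcloud compute networks peerings update` call in the loop above.
while IFS= read -r peer; do
  echo "would update peering: $peer"
done < <(split_semicolons "gke-n1234-peer;gke-n5678-peer")
```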

Patching Cluster for Master Global Access

Because `gcloud container clusters create-auto` does not accept the `--enable-master-global-access` flag, each cluster must be updated after creation so that clients outside the cluster's region can reach the control plane. This is needed for cross-cluster service discovery (Anthos Service Mesh) and for VPN gateways that reside in a different region than the cluster.

gcloud container clusters update ${CLUSTER_1} \
  --region=${CLUSTER_1_REGION} \
  --enable-master-global-access
gcloud container clusters update ${CLUSTER_1} \
  --region=${CLUSTER_1_REGION} \
  --update-labels=mesh_id=${MESH_ID},deployer=jonchen
gcloud container clusters update ${CLUSTER_2} \
  --region=${CLUSTER_2_REGION} \
  --enable-master-global-access
gcloud container clusters update ${CLUSTER_2} \
  --region=${CLUSTER_2_REGION} \
  --update-labels=mesh_id=${MESH_ID},deployer=jonchen

This script also adds some identifying labels to the clusters (optional), because the `create-auto` command does not accept a labels flag.

Kube Config Setup

Because most of the remaining scripts switch between clusters, this step writes credentials for both clusters into a dedicated kubeconfig and renames the generated contexts to the cluster names.

touch ${WORKDIR}/asm-kubeconfig && export KUBECONFIG=${WORKDIR}/asm-kubeconfig
gcloud container clusters get-credentials ${CLUSTER_1} --zone ${CLUSTER_1_REGION}
gcloud container clusters get-credentials ${CLUSTER_2} --zone ${CLUSTER_2_REGION}
kubectl ctx ${CLUSTER_1}=gke_${PROJECT_ID}_${CLUSTER_1_REGION}_${CLUSTER_1}
kubectl ctx ${CLUSTER_2}=gke_${PROJECT_ID}_${CLUSTER_2_REGION}_${CLUSTER_2}
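The renames above rely on `get-credentials` naming each context `gke_<project>_<location>_<cluster>`. If you script the rename for more clusters, a small helper that builds that string avoids typos; `gke_context_name` is a hypothetical name, and the loop only echoes the commands (a dry run).

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the context name that
# `gcloud container clusters get-credentials` writes to kubeconfig.
gke_context_name() {
  local project="$1" location="$2" cluster="$3"
  printf 'gke_%s_%s_%s\n' "$project" "$location" "$cluster"
}

# Dry run: print the rename commands for both clusters (remove `echo` to execute).
for pair in "${CLUSTER_1:-jc-us-west2} ${CLUSTER_1_REGION:-us-west2}" \
            "${CLUSTER_2:-jc-us-central1} ${CLUSTER_2_REGION:-us-central1}"; do
  read -r cluster region <<< "$pair"
  echo kubectl ctx "${cluster}=$(gke_context_name "${PROJECT_ID:-jc-project}" "$region" "$cluster")"
done
```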

NAT Setup

Nodes in a private cluster have no external IP addresses, so they need Cloud NAT to reach the internet. NAT is required if any of the images you pull are hosted outside Google, even if your workloads themselves do not need internet access.

gcloud compute routers create ${CLUSTER_NETWORK}-${CLUSTER_1_REGION}-nat-router \
  --network ${CLUSTER_NETWORK} \
  --region ${CLUSTER_1_REGION}
gcloud compute routers create ${CLUSTER_NETWORK}-${CLUSTER_2_REGION}-nat-router \
  --network ${CLUSTER_NETWORK} \
  --region ${CLUSTER_2_REGION}
gcloud compute routers nats create ${CLUSTER_NETWORK}-${CLUSTER_1_REGION}-nat \
  --router-region ${CLUSTER_1_REGION} \
  --router ${CLUSTER_NETWORK}-${CLUSTER_1_REGION}-nat-router \
  --nat-all-subnet-ip-ranges \
  --auto-allocate-nat-external-ips
gcloud compute routers nats create ${CLUSTER_NETWORK}-${CLUSTER_2_REGION}-nat \
  --router-region ${CLUSTER_2_REGION} \
  --router ${CLUSTER_NETWORK}-${CLUSTER_2_REGION}-nat-router \
  --nat-all-subnet-ip-ranges \
  --auto-allocate-nat-external-ips

Installing ASM

This guide installs ASM with asmcli.

curl https://storage.googleapis.com/csm-artifacts/asm/asmcli_$ASM_VERSION > asmcli
chmod +x asmcli
# Grant your own account cluster-admin on both clusters
kubectl --context=${CLUSTER_1} create clusterrolebinding cluster-admin-binding \
  --clusterrole=cluster-admin \
  --user=$(gcloud config get-value core/account)
kubectl --context=${CLUSTER_2} create clusterrolebinding cluster-admin-binding \
  --clusterrole=cluster-admin \
  --user=$(gcloud config get-value core/account)
./asmcli install \
  -p $PROJECT_ID \
  -l $CLUSTER_1_REGION \
  -n $CLUSTER_1 \
  --fleet_id $PROJECT_ID \
  --managed \
  --verbose \
  --output_dir $CLUSTER_1 \
  --use_managed_cni \
  --enable-all \
  --channel $ASM_RELEASE_CHANNEL
./asmcli install \
  -p $PROJECT_ID \
  -l $CLUSTER_2_REGION \
  -n $CLUSTER_2 \
  --fleet_id $PROJECT_ID \
  --managed \
  --use_managed_cni \
  --verbose \
  --output_dir $CLUSTER_2 \
  --enable-all \
  --channel $ASM_RELEASE_CHANNEL

For Autopilot clusters, the `--use_managed_cni` flag must be set, and the channel must be one that supports Autopilot clusters; at the time of writing, that is the rapid channel.

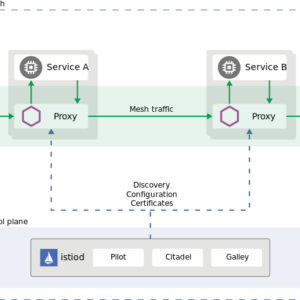

Cross Cluster Service Discovery

Secret Generation

To enable cross-cluster service discovery (Anthos multi-cluster mesh), the remote secret must be created with the cluster's public endpoint. A private cluster still has a hidden public endpoint, which Google uses to manage the service mesh. The script below extracts that value and creates a remote secret that uses it.

export ISTIOCTL_CMD=${WORKDIR}/${CLUSTER_1}/${ASM_PACKAGE}/bin/istioctl
PUBLIC_IP=`gcloud container clusters describe "${CLUSTER_1}" --project "${PROJECT_ID}" \
  --zone "${CLUSTER_1_REGION}" --format "value(privateClusterConfig.publicEndpoint)"`
${ISTIOCTL_CMD} x create-remote-secret --context=${CLUSTER_1} --name=${CLUSTER_1} --server=https://${PUBLIC_IP} > secret-kubeconfig-${CLUSTER_1}.yaml
PUBLIC_IP=`gcloud container clusters describe "${CLUSTER_2}" --project "${PROJECT_ID}" \
  --zone "${CLUSTER_2_REGION}" --format "value(privateClusterConfig.publicEndpoint)"`
${ISTIOCTL_CMD} x create-remote-secret --context=${CLUSTER_2} --name=${CLUSTER_2} --server=https://${PUBLIC_IP} > secret-kubeconfig-${CLUSTER_2}.yaml
# Each cluster receives the secret for the other cluster
kubectl --context=${CLUSTER_2} -n istio-system apply -f secret-kubeconfig-${CLUSTER_1}.yaml
kubectl --context=${CLUSTER_1} -n istio-system apply -f secret-kubeconfig-${CLUSTER_2}.yaml

Master Authorized Network Configuration

For cross-cluster service discovery to work, Pods in each cluster must be able to reach the other cluster's control-plane endpoint, so each cluster's Pod CIDR is added to the other cluster's master authorized networks. The existing entries are read first and passed back in, with semicolons converted to commas.

POD_IP_CIDR_1=`gcloud container clusters describe ${CLUSTER_1} --project ${PROJECT_ID} --zone ${CLUSTER_1_REGION} \
  --format "value(ipAllocationPolicy.clusterIpv4CidrBlock)"`
POD_IP_CIDR_2=`gcloud container clusters describe ${CLUSTER_2} --project ${PROJECT_ID} --zone ${CLUSTER_2_REGION} \
  --format "value(ipAllocationPolicy.clusterIpv4CidrBlock)"`
EXISTING_CIDR_1=`gcloud container clusters describe ${CLUSTER_1} --project ${PROJECT_ID} --zone ${CLUSTER_1_REGION} \
  --format "value(masterAuthorizedNetworksConfig.cidrBlocks.cidrBlock)"`
gcloud container clusters update ${CLUSTER_1} --project ${PROJECT_ID} --zone ${CLUSTER_1_REGION} \
  --enable-master-authorized-networks \
  --master-authorized-networks ${POD_IP_CIDR_2},${EXISTING_CIDR_1//;/,}
EXISTING_CIDR_2=`gcloud container clusters describe ${CLUSTER_2} --project ${PROJECT_ID} --zone ${CLUSTER_2_REGION} \
  --format "value(masterAuthorizedNetworksConfig.cidrBlocks.cidrBlock)"`
gcloud container clusters update ${CLUSTER_2} --project ${PROJECT_ID} --zone ${CLUSTER_2_REGION} \
  --enable-master-authorized-networks \
  --master-authorized-networks ${POD_IP_CIDR_1},${EXISTING_CIDR_2//;/,}
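`--master-authorized-networks` sets the whole list rather than appending to it, which is why the script reads the existing CIDRs and passes them back. One side effect is that re-running the script adds the other cluster's Pod CIDR a second time. A hypothetical `merge_cidrs` helper that de-duplicates before building the comma-separated value could look like this (a sketch, not part of gcloud):

```shell
#!/usr/bin/env bash
# Hypothetical helper: merge new CIDRs with an existing semicolon-separated
# list (as printed by gcloud value()) into a sorted, de-duplicated,
# comma-separated list.
merge_cidrs() {
  local existing="$1"; shift
  local -a all
  IFS=';' read -r -a all <<< "$existing"
  all+=("$@")
  printf '%s\n' "${all[@]}" | sed '/^$/d' | sort -u | paste -sd, -
}

# Example: the VPN range plus the other cluster's Pod CIDR, passed twice.
merge_cidrs "192.168.0.0/23" "10.0.0.0/14" "10.0.0.0/14"
```

In the script above, it would replace the inline expansion, e.g. `--master-authorized-networks "$(merge_cidrs "${EXISTING_CIDR_1}" "${POD_IP_CIDR_2}")"`.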

Firewall Rules

Firewall rules are needed to allow cross-cluster communication. Because Autopilot does not create user-managed, GCE-backed nodes, the target tags are not necessary; the script still creates the firewall rule and simply reports that target-tags is empty.

function join_by { local IFS="$1"; shift; echo "$*"; }
ALL_CLUSTER_CIDRS=$(for P in $PROJECT_ID; do gcloud --project $P container clusters list --filter="name:($CLUSTER_1,$CLUSTER_2)" --format='value(clusterIpv4Cidr)'; done | sort | uniq)
ALL_CLUSTER_CIDRS=$(join_by , $(echo "${ALL_CLUSTER_CIDRS}"))
ALL_CLUSTER_NETTAGS=$(for P in $PROJECT_ID; do gcloud --project $P compute instances list --filter="name:($CLUSTER_1,$CLUSTER_2)" --format='value(tags.items.[0])' ; done | sort | uniq)
ALL_CLUSTER_NETTAGS=$(join_by , $(echo "${ALL_CLUSTER_NETTAGS}"))
gcloud compute firewall-rules create istio-multicluster-pods \
  --network=${CLUSTER_NETWORK} \
  --allow=tcp,udp,icmp,esp,ah,sctp \
  --direction=INGRESS \
  --priority=900 \
  --source-ranges="${ALL_CLUSTER_CIDRS}" \
  --target-tags="${ALL_CLUSTER_NETTAGS}" --quiet

Whereami Deployment

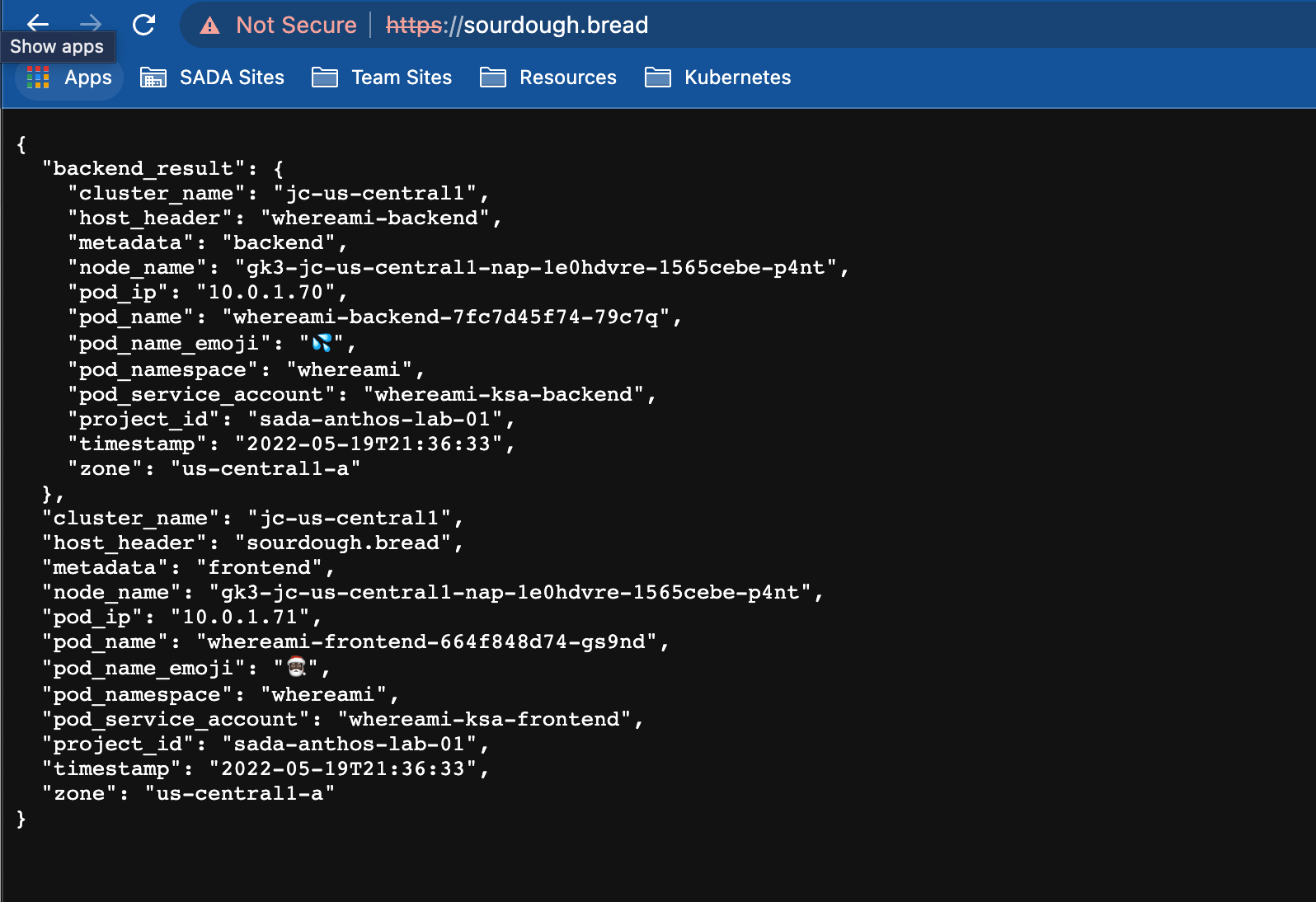

This tutorial uses whereami, which reports where the serving Pod is running and so makes it easy to see which cluster and zone handled a request.

Namespace Deployment

The namespace needs the annotation `mesh.cloud.google.com/proxy: '{"managed":"true"}'` and the label `istio.io/rev: ${ASM_LABEL}`.

cat <<EOF > ${WORKDIR}/namespace-whereami.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: whereami
  annotations:
    mesh.cloud.google.com/proxy: '{"managed":"true"}'
  labels:
    istio.io/rev: ${ASM_LABEL}
EOF
for CLUSTER in $CLUSTER_1 $CLUSTER_2; do
  echo -e "\nCreating whereami namespace in $CLUSTER"
  if [ -z "$CLUSTER" ]; then echo ""; else
    kubectl --context=${CLUSTER} apply -f ${WORKDIR}/namespace-whereami.yaml;
  fi
done

Whereami deployment

The script below deploys the whereami frontend and backend into the whereami namespace of each cluster and waits for the Deployments to become available.

git clone https://github.com/theemadnes/gke-whereami.git whereami
for CLUSTER in $CLUSTER_1 $CLUSTER_2; do
  echo -e "\nDeploying whereami in $CLUSTER cluster..."
  if [ -z "$CLUSTER" ]; then echo ""; else
    kubectl --context=${CLUSTER} apply -k whereami/k8s-backend-overlay-example/ -n whereami
    kubectl --context=${CLUSTER} apply -k whereami/k8s-frontend-overlay-example/ -n whereami;
  fi
done
unset SERVICES
SERVICES=(whereami-frontend whereami-backend)
for CLUSTER in $CLUSTER_1 $CLUSTER_2; do
  echo -e "\nChecking deployments in $CLUSTER cluster..."
  for SVC in "${SERVICES[@]}"; do
    kubectl --context=${CLUSTER} -n whereami wait --for=condition=available --timeout=5m deployment $SVC
  done
done

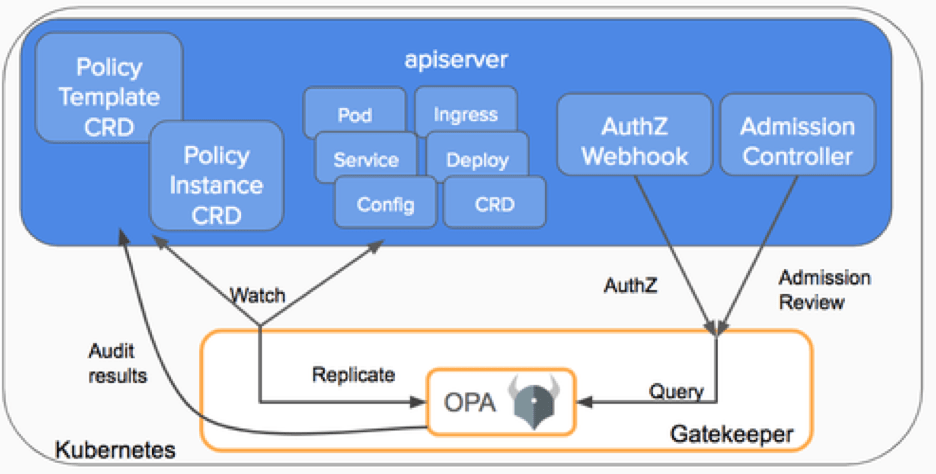

mTLS

I found a bug where a mesh-wide PeerAuthentication policy isn't respected, so the manifests below apply STRICT mTLS to the whereami namespace instead.

# Resource names must be lowercase (RFC 1123), so the policy is named "mtls" rather than "mTLS"
kubectl apply --context=${CLUSTER_1} -f - <<EOF
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"
metadata:
  name: "mtls"
  namespace: "whereami"
spec:
  mtls:
    mode: STRICT
EOF
kubectl apply --context=${CLUSTER_2} -f - <<EOF
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"
metadata:
  name: "mtls"
  namespace: "whereami"
spec:
  mtls:
    mode: STRICT
EOF

Cross Cluster Testing

The following script deploys a sleep pod to the whereami namespace of each cluster. For each cluster region, it then repeatedly curls the whereami frontend until a response is served from that region, confirming that each cluster can reach workloads in both regions.

for CLUSTER in ${CLUSTER_1} ${CLUSTER_2}
do
  # echo -e is needed for Bash to interpret the \e color escapes
  echo -e "\e[1;92mTesting connectivity from $CLUSTER to all other clusters...\e[0m"
  echo -e "\e[96mDeploying curl utility in $CLUSTER cluster...\e[0m"
  kubectl --context=${CLUSTER} -n whereami apply -f https://raw.githubusercontent.com/istio/istio/master/samples/sleep/sleep.yaml
  kubectl --context=${CLUSTER} -n whereami wait --for=condition=available deployment sleep --timeout=5m
  echo
  for CLUSTER_REGION in ${CLUSTER_1_REGION} ${CLUSTER_2_REGION}
  do
    echo -e "\e[92mTesting connectivity from $CLUSTER to $CLUSTER_REGION...\e[0m"
    SLEEP_POD=`kubectl --context=${CLUSTER} -n whereami get pod -l app=sleep -o jsonpath='{.items[0].metadata.name}'`
    REGION=location
    # whereami reports a zone such as us-west2-a; trimming the last two characters gives the region
    while [[ "$REGION" != "$CLUSTER_REGION" ]]
    do
      REGION=`kubectl --context=${CLUSTER} -n whereami exec -i -c sleep $SLEEP_POD -- curl -s whereami-frontend.whereami:80 | jq -r '.zone' | rev | cut -c 3- | rev`
      echo "$CLUSTER -> $REGION"
    done
    echo -e "\e[96m$CLUSTER can access $REGION\n\e[0m"
  done
  echo
done
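The test loop derives the region from whereami's `zone` field with `rev | cut -c 3- | rev`, which drops the last two characters (for example, `us-west2-a` becomes `us-west2`). Bash parameter expansion can do the same without spawning extra processes by removing the trailing `-<letter>` suffix. This sketch assumes zone names always follow the `<region>-<letter>` pattern, and `zone_to_region` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Strip the trailing "-<zone letter>" from a GCP zone name to get its region.
zone_to_region() {
  local zone="$1"
  printf '%s\n' "${zone%-*}"
}

zone_to_region us-west2-a      # us-west2
zone_to_region us-central1-f   # us-central1
```

Inside the loop, it would be used as `REGION=$(zone_to_region "$(kubectl ... | jq -r '.zone')")`.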

Cert-manager Configuration

Google-managed certificates and certificates verified by a third party cannot be issued for an internal DNS name, so we must create our own certificate authority and have GKE issue and manage certificates through it. This can be done with cert-manager and the google-cas-issuer deployment.

Private Certificate Authority Configuration

The script below creates all the resources required to issue certificates for the clusters' ingress gateways.

Because Autopilot clusters do not support externally created mesh certificates, the gateway subordinate CA uses the preset profile for a TLS server subordinate (`subordinate_server_tls_pathlen_0`) rather than an mTLS profile.

gcloud privateca pools create $ROOT_CA_POOL \
  --location $CA_LOCATION \
  --tier enterprise
gcloud privateca roots create $ROOT_CA \
  --auto-enable \
  --key-algorithm ec-p384-sha384 \
  --location $CA_LOCATION \
  --pool $ROOT_CA_POOL \
  --subject "CN=Example Root CA, O=Example Organization" \
  --use-preset-profile root_unconstrained
gcloud privateca pools create $SUB_CA_POOL_GW \
  --location $CA_LOCATION \
  --tier devops
gcloud privateca subordinates create $SUB_CA_POOL \
  --auto-enable \
  --issuer-location $CA_LOCATION \
  --issuer-pool $ROOT_CA_POOL \
  --key-algorithm ec-p256-sha256 \
  --location $CA_LOCATION \
  --pool $SUB_CA_POOL_GW \
  --subject "CN=Example Gateway mTLS CA, O=Example Organization" \
  --use-preset-profile subordinate_server_tls_pathlen_0
# The heredoc delimiter is unquoted, so ${PROJECT_ID} is expanded by the shell
cat << EOF > policy.yaml
baselineValues:
  keyUsage:
    baseKeyUsage:
      digitalSignature: true
      keyEncipherment: true
    extendedKeyUsage:
      serverAuth: true
      clientAuth: true
  caOptions:
    isCa: false
identityConstraints:
  allowSubjectPassthrough: false
  allowSubjectAltNamesPassthrough: true
  celExpression:
    expression: subject_alt_names.all(san, san.type == URI && san.value.startsWith("spiffe://${PROJECT_ID}.svc.id.goog/ns/") )
EOF
gcloud privateca pools create $SUB_CA_POOL_SC \
  --issuance-policy policy.yaml \
  --location $CA_LOCATION \
  --tier devops
gcloud privateca subordinates create $SUB_CA_SC \
  --auto-enable \
  --issuer-location $CA_LOCATION \
  --issuer-pool $ROOT_CA_POOL \
  --key-algorithm ec-p256-sha256 \
  --location $CA_LOCATION \
  --pool $SUB_CA_POOL_SC \
  --subject "CN=Example Sidecar mTLS CA, O=Example Organization" \
  --use-preset-profile subordinate_mtls_pathlen_0
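The CEL expression in `policy.yaml` only admits certificates whose subject alternative names are all URI SANs starting with the project's SPIFFE prefix, `spiffe://<project>.svc.id.goog/ns/`. The Bash sketch below mirrors that rule locally so you can reason about which SANs the sidecar pool would accept. It is an illustration only, not how CA Service evaluates CEL; `san_allowed` is a hypothetical name, and writing SANs as `TYPE:value` strings is an assumed encoding.

```shell
#!/usr/bin/env bash
# Illustration of the issuance-policy rule:
#   subject_alt_names.all(san, san.type == URI &&
#     san.value.startsWith("spiffe://<project>.svc.id.goog/ns/"))
# Each argument after the project is one SAN written as "TYPE:value" (assumed format).
san_allowed() {
  local project="$1"; shift
  local prefix="spiffe://${project}.svc.id.goog/ns/"
  local san type value
  for san in "$@"; do
    type="${san%%:*}"     # text before the first colon, e.g. URI or DNS
    value="${san#*:}"     # text after the first colon
    [[ "$type" == "URI" && "$value" == "$prefix"* ]] || return 1
  done
  return 0   # like CEL's all(), an empty SAN list passes
}

if san_allowed jc-project "URI:spiffe://jc-project.svc.id.goog/ns/whereami/sa/default"; then
  echo "allowed"
fi
if ! san_allowed jc-project "DNS:sourdough.bread"; then
  echo "rejected"
fi
```

A DNS SAN such as `sourdough.bread` fails this rule, which is consistent with the gateway certificate being requested from `$SUB_CA_POOL_GW`, a pool created without an issuance policy.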

Cert-manager Install

Because Autopilot does not allow any modifications to the kube-system namespace, cert-manager's leader-election Roles and RoleBindings, which target kube-system by default, must be patched into the cert-manager namespace, and the controllers must be told to use that namespace for leader election. Otherwise cert-manager cannot be deployed on Autopilot.

Below is a kustomization that applies those patches to cert-manager:

mkdir -p cert-manager
cat <<EOF > cert-manager/kustomization.yaml
---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - https://github.com/jetstack/cert-manager/releases/download/v1.8.0/cert-manager.yaml
patches:
  - target:
      kind: Role
      name: cert-manager:leaderelection
    patch: |-
      - op: replace
        path: /metadata/namespace
        value: cert-manager
  - target:
      kind: Role
      name: cert-manager-cainjector:leaderelection
    patch: |-
      - op: replace
        path: /metadata/namespace
        value: cert-manager
  - target:
      kind: RoleBinding
      name: cert-manager:leaderelection
    patch: |-
      - op: replace
        path: /metadata/namespace
        value: cert-manager
  - target:
      kind: RoleBinding
      name: cert-manager-cainjector:leaderelection
    patch: |-
      - op: replace
        path: /metadata/namespace
        value: cert-manager
  - target:
      kind: Deployment
      name: cert-manager
    patch: |-
      - op: replace
        path: /spec/template/spec/containers/0/args/2
        value: --leader-election-namespace=cert-manager
  - target:
      kind: Deployment
      name: cert-manager-cainjector
    patch: |-
      - op: replace
        path: /spec/template/spec/containers/0/args/1
        value: --leader-election-namespace=cert-manager
EOF
kubectl --context=${CLUSTER_1} apply -k cert-manager
kubectl --context=${CLUSTER_2} apply -k cert-manager

Google CAS Configuration

Because we are using Google CAS, we need to install google-cas-issuer and grant its service account the IAM permissions needed to request and receive certificates from our private certificate authority.

kubectl --context=${CLUSTER_1} apply -f https://github.com/jetstack/google-cas-issuer/releases/download/v0.5.3/google-cas-issuer-v0.5.3.yaml
kubectl --context=${CLUSTER_2} apply -f https://github.com/jetstack/google-cas-issuer/releases/download/v0.5.3/google-cas-issuer-v0.5.3.yaml
gcloud iam service-accounts create sa-google-cas-issuer
gcloud privateca pools add-iam-policy-binding $SUB_CA_POOL_GW \
  --role=roles/privateca.certificateRequester \
  --member="serviceAccount:sa-google-cas-issuer@${PROJECT_ID}.iam.gserviceaccount.com" \
  --location=${CA_LOCATION}
gcloud iam service-accounts add-iam-policy-binding \
  --role roles/iam.workloadIdentityUser \
  --member "serviceAccount:$PROJECT_ID.svc.id.goog[cert-manager/ksa-google-cas-issuer]" \
  sa-google-cas-issuer@${PROJECT_ID:?PROJECT is not set}.iam.gserviceaccount.com
kubectl --context=${CLUSTER_1} annotate serviceaccount \
  --namespace cert-manager \
  ksa-google-cas-issuer \
  iam.gke.io/gcp-service-account=sa-google-cas-issuer@${PROJECT_ID:?PROJECT is not set}.iam.gserviceaccount.com \
  --overwrite=true
kubectl --context=${CLUSTER_2} annotate serviceaccount \
  --namespace cert-manager \
  ksa-google-cas-issuer \
  iam.gke.io/gcp-service-account=sa-google-cas-issuer@${PROJECT_ID:?PROJECT is not set}.iam.gserviceaccount.com \
  --overwrite=true
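Workload Identity links the Kubernetes service account to the Google service account through two strings: the IAM member `serviceAccount:<project>.svc.id.goog[<namespace>/<ksa>]`, which is granted `roles/iam.workloadIdentityUser`, and the Google service account email placed in the `iam.gke.io/gcp-service-account` annotation. The hypothetical helpers below build both from the same inputs, which helps keep the binding and the annotation in sync.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the two Workload Identity strings used above.

# IAM member that represents a Kubernetes service account.
wi_member() {
  local project="$1" namespace="$2" ksa="$3"
  printf 'serviceAccount:%s.svc.id.goog[%s/%s]\n' "$project" "$namespace" "$ksa"
}

# Email of a Google service account in the given project.
gsa_email() {
  local name="$1" project="$2"
  printf '%s@%s.iam.gserviceaccount.com\n' "$name" "$project"
}

wi_member "${PROJECT_ID:-jc-project}" cert-manager ksa-google-cas-issuer
gsa_email sa-google-cas-issuer "${PROJECT_ID:-jc-project}"
```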

After deploying google-cas-issuer and granting the permissions, create a GoogleCASClusterIssuer. You can also create a namespaced issuer (GoogleCASIssuer) if you prefer.

cat <<EOF > ${WORKDIR}/googlecasclusterissuer.yaml
apiVersion: cas-issuer.jetstack.io/v1beta1
kind: GoogleCASClusterIssuer
metadata:
  name: gateway-cas-issuer-cluster
spec:
  project: $PROJECT_ID
  location: $CA_LOCATION
  caPoolId: $SUB_CA_POOL_GW
EOF
kubectl --context=${CLUSTER_1} apply -f ${WORKDIR}/googlecasclusterissuer.yaml
kubectl --context=${CLUSTER_2} apply -f ${WORKDIR}/googlecasclusterissuer.yaml

Istio Ingress Gateway Configuration

As noted above, the ingress gateway is exposed through an L4 internal load balancer with global access and uses a certificate issued by Google CAS.

Ingress Gateway Creation

The script below reserves two internal IP addresses, uses istioctl to generate manifests for the gateways, and then applies them to the respective clusters.

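kubectl create --context=${CLUSTER_1} namespace asm-gateway
kubectl create --context=${CLUSTER_2} namespace asm-gateway
# The gateway namespace needs the mesh.cloud.google.com/proxy annotation (see TLDR Takeaways)
kubectl annotate --context=${CLUSTER_1} namespace asm-gateway mesh.cloud.google.com/proxy='{"managed":"true"}'
kubectl annotate --context=${CLUSTER_2} namespace asm-gateway mesh.cloud.google.com/proxy='{"managed":"true"}'
LOAD_BALANCER_IP1=$(gcloud compute addresses create \
  asm-ingress-gateway-ilb-$CLUSTER_1 \
  --region $CLUSTER_1_REGION \
  --subnet $CLUSTER_1_SUBNET \
  --format 'value(address)')
LOAD_BALANCER_IP2=$(gcloud compute addresses create \
  asm-ingress-gateway-ilb-$CLUSTER_2 \
  --region $CLUSTER_2_REGION \
  --subnet $CLUSTER_2_SUBNET \
  --format 'value(address)')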

cat << EOF > ingressgateway-operator1.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: ingressgateway-operator
  annotations:
    config.kubernetes.io/local-config: "true"
spec:
  profile: empty
  revision: $ASM_LABEL
  components:
    ingressGateways:
    - name: istio-ingressgateway
      namespace: asm-gateway
      enabled: true
      k8s:
        overlays:
        - apiVersion: apps/v1
          kind: Deployment
          name: istio-ingressgateway
          patches:
          - path: spec.template.metadata.annotations
            value:
              inject.istio.io/templates: gateway
          - path: spec.template.metadata.labels.sidecar\.istio\.io/inject
            value: "true"
          - path: spec.template.spec.containers[name:istio-proxy]
            value:
              name: istio-proxy
              image: auto
        service:
          loadBalancerIP: $LOAD_BALANCER_IP1
        serviceAnnotations:
          networking.gke.io/load-balancer-type: Internal
          networking.gke.io/internal-load-balancer-allow-global-access: "true"
EOF
cat << EOF > ingressgateway-operator2.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: ingressgateway-operator
  annotations:
    config.kubernetes.io/local-config: "true"
spec:
  profile: empty
  revision: $ASM_LABEL
  components:
    ingressGateways:
    - name: istio-ingressgateway
      namespace: asm-gateway
      enabled: true
      k8s:
        overlays:
        - apiVersion: apps/v1
          kind: Deployment
          name: istio-ingressgateway
          patches:
          - path: spec.template.metadata.annotations
            value:
              inject.istio.io/templates: gateway
          - path: spec.template.metadata.labels.sidecar\.istio\.io/inject
            value: "true"
          - path: spec.template.spec.containers[name:istio-proxy]
            value:
              name: istio-proxy
              image: auto
        service:
          loadBalancerIP: $LOAD_BALANCER_IP2
        serviceAnnotations:
          networking.gke.io/load-balancer-type: Internal
          networking.gke.io/internal-load-balancer-allow-global-access: "true"
EOF

./$CLUSTER_1/istioctl manifest generate \
  --filename ingressgateway-operator1.yaml \
  --output $CLUSTER_1-ingressgateway
./$CLUSTER_2/istioctl manifest generate \
  --filename ingressgateway-operator2.yaml \
  --output $CLUSTER_2-ingressgateway
# Apply each cluster's manifests to that cluster's context
kubectl --context=${CLUSTER_1} apply --recursive --filename $CLUSTER_1-ingressgateway/
kubectl --context=${CLUSTER_2} apply --recursive --filename $CLUSTER_2-ingressgateway/

The IstioOperator manifests set two Service annotations: `networking.gke.io/load-balancer-type: Internal` tells GKE to create an internal L4 load balancer (ILB), and `networking.gke.io/internal-load-balancer-allow-global-access: "true"` enables global access on that load balancer, so clients in any region of your VPC can reach it.

Gateway and VirtualService Configuration

The example below creates a Gateway in the asm-gateway namespace that routes to whereami-frontend, attaches a Google CAS-issued certificate for the host name sourdough.bread, and redirects HTTP requests on port 80 to HTTPS.

# sourdough.bread is listed explicitly because *.sourdough.bread does not match the bare name
cat << EOF > certificate.yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: sourdough-bread-cert
  namespace: asm-gateway
spec:
  secretName: sourdough-bread-cert-tls
  commonName: sourdough.bread
  dnsNames:
  - "sourdough.bread"
  - "*.sourdough.bread"
  duration: 24h
  renewBefore: 8h
  issuerRef:
    group: cas-issuer.jetstack.io
    kind: GoogleCASClusterIssuer
    name: gateway-cas-issuer-cluster
EOF
kubectl apply --context=${CLUSTER_1} -f certificate.yaml
kubectl apply --context=${CLUSTER_2} -f certificate.yaml

cat <<EOF > ${WORKDIR}/gateway.yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: whereami-gateway
  namespace: asm-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    hosts:
    - whereami/sourdough.bread
    tls:
      mode: SIMPLE
      credentialName: sourdough-bread-cert-tls
  - port:
      number: 80
      name: http
      protocol: HTTP
    tls:
      httpsRedirect: true
    hosts:
    - whereami/sourdough.bread
EOF
kubectl apply --context=${CLUSTER_1} -f ${WORKDIR}/gateway.yaml
kubectl apply --context=${CLUSTER_2} -f ${WORKDIR}/gateway.yaml

cat <<EOF > ${WORKDIR}/virtual-service.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: whereami-frontend
  namespace: whereami
spec:
  hosts:
  - sourdough.bread
  gateways:
  - asm-gateway/whereami-gateway
  http:
  - route:
    - destination:
        host: whereami-frontend
        port:
          number: 80
EOF
kubectl apply --context=${CLUSTER_1} -f ${WORKDIR}/virtual-service.yaml
kubectl apply --context=${CLUSTER_2} -f ${WORKDIR}/virtual-service.yaml
echo "$CLUSTER_1_REGION IP: $LOAD_BALANCER_IP1"
echo "$CLUSTER_2_REGION IP: $LOAD_BALANCER_IP2"

Notice that the host entries on the Gateway resource and the gateway reference on the VirtualService resource include the namespace of the referenced resource (`whereami/sourdough.bread` and `asm-gateway/whereami-gateway`); the namespace prefix on a Gateway host restricts which namespace's VirtualServices may bind to it. To enable mTLS at the gateway, set the Gateway's tls mode to MUTUAL; clients must then present a certificate that the ingress gateway can verify in order to authenticate.

The two lines at the end of the script print out the region and the IP for the gateways so that you can add them to your internal DNS zone.
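The Certificate in this section sets `duration: 24h` and `renewBefore: 8h`, so cert-manager should begin renewing each certificate 16 hours after issuance (24h minus 8h). The arithmetic is sketched below with a hypothetical `to_seconds` helper that only understands the single `h`, `m`, or `s` suffixes used here; cert-manager itself accepts Go duration strings, which this sketch does not fully parse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a simple duration like "24h", "90m" or "30s"
# to seconds. Compound values such as "1h30m" are not supported.
to_seconds() {
  local d="$1" n="${1%[hms]}"
  case "$d" in
    *h) echo $(( n * 3600 )) ;;
    *m) echo $(( n * 60 )) ;;
    *s) echo "$n" ;;
    *) echo "unsupported duration: $d" >&2; return 1 ;;
  esac
}

duration=$(to_seconds 24h)
renew_before=$(to_seconds 8h)
renew_after=$(( duration - renew_before ))
echo "renewal starts $(( renew_after / 3600 ))h after issuance"   # renewal starts 16h after issuance
```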

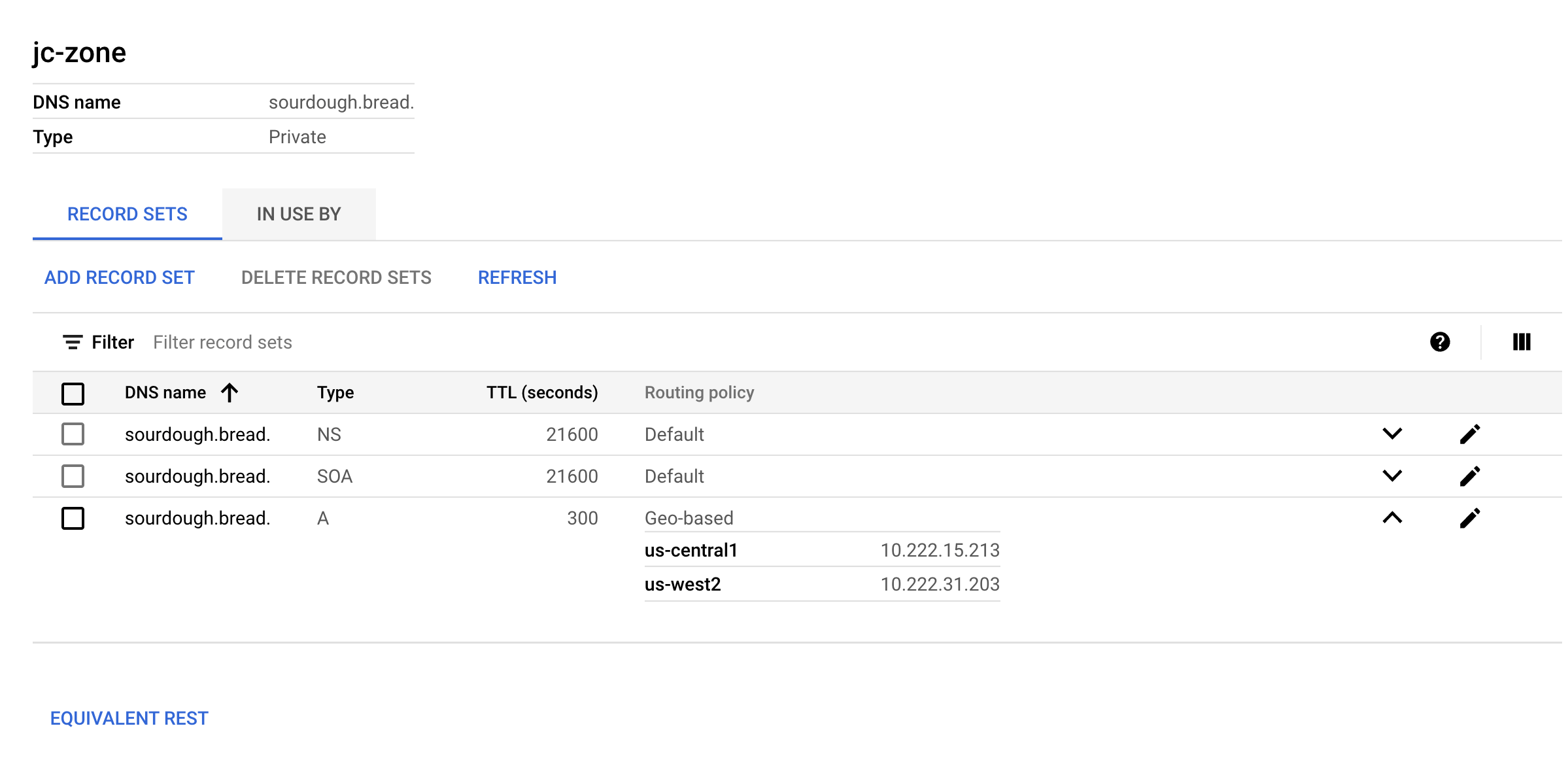

DNS Configuration

Create a private Cloud DNS zone attached to your VPC and add an A record set with a geolocation (geo-based) routing policy that points each region at that region's ingress gateway IP.
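A geolocation routing policy maps each source region to the record data returned for it. If you script the record set with `gcloud dns record-sets create`, my understanding is that the routing data is passed as a `region=IP;region=IP` string; the flag names in the commented usage are an assumption to check against `gcloud dns record-sets create --help`. `geo_routing_data` is a hypothetical helper, and the fallback IPs are placeholders only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "region=ip;region=ip" from alternating
# region/IP arguments for a geolocation routing policy.
geo_routing_data() {
  local -a entries=()
  while (( $# >= 2 )); do
    entries+=("$1=$2")
    shift 2
  done
  local IFS=';'
  echo "${entries[*]}"
}

# Placeholder IPs are used only when the variables from the gateway script are unset.
DATA=$(geo_routing_data \
  "${CLUSTER_1_REGION:-us-west2}" "${LOAD_BALANCER_IP1:-192.0.2.10}" \
  "${CLUSTER_2_REGION:-us-central1}" "${LOAD_BALANCER_IP2:-192.0.2.20}")
echo "$DATA"
# Assumed usage (verify the flags for your gcloud version):
# gcloud dns record-sets create sourdough.bread. --zone=YOUR_PRIVATE_ZONE \
#   --type=A --ttl=300 --routing-policy-type=GEO --routing-policy-data="$DATA"
```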

Testing

You can test the endpoint in one of two ways: open it in a web browser if you are connected through the VPN, or run curl in verbose mode:

curl -vk https://sourdough.bread

In both cases the certificate will not be trusted, because it is issued by your private CA rather than a publicly trusted one. To have your clients trust it, install the root CA certificate from your Google CAS and mark it as trusted.

To view and debug certificate-related issues, use openssl. The -servername flag is required to set the TLS SNI extension; without it, verification will fail:

openssl s_client -connect sourdough.bread:443 -servername sourdough.bread -showcerts
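If openssl shows the certificate but hostname verification still fails, check the SAN list (the `X509v3 Subject Alternative Name` section of `openssl x509 -noout -text`). A wildcard such as `*.sourdough.bread` matches exactly one additional left-most label, so a certificate whose only DNS SAN is `*.sourdough.bread` will not validate for `https://sourdough.bread`. The sketch below is a simplified, case-sensitive version of that matching rule; `san_matches_host` is a hypothetical name, not an openssl function.

```shell
#!/usr/bin/env bash
# Simplified RFC 6125-style check: does a DNS SAN cover a host name?
# A wildcard is only honored as the whole left-most label ("*.example.com")
# and matches exactly one label. Comparison is case-sensitive for brevity.
san_matches_host() {
  local san="$1" host="$2"
  if [[ "$san" == \*.* ]]; then
    local suffix="${san#\*}"            # e.g. ".sourdough.bread"
    local label="${host%"$suffix"}"     # the part the wildcard would have to cover
    [[ "$host" == *"$suffix" && -n "$label" && "$label" != *.* ]]
  else
    [[ "$san" == "$host" ]]
  fi
}

for host in sourdough.bread www.sourdough.bread a.b.sourdough.bread; do
  if san_matches_host "*.sourdough.bread" "$host"; then
    echo "*.sourdough.bread covers $host"
  else
    echo "*.sourdough.bread does NOT cover $host"
  fi
done
```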

TLDR Takeaways

- Cluster Creation
  - Gcloud
    - The `gcloud` command to create an Autopilot cluster does not support the master global access flag; this needs to be patched after cluster creation.
  - Terraform
    - For the ASM module to work, the `deploy_using_private_endpoint` option needs to be enabled in the GKE module.
- ASM
  - For an Autopilot cluster, ASM must be installed with the managed CNI option.
  - Managed ASM for Autopilot is currently only available in the rapid release channel.
  - Even though the cluster was configured with only a private endpoint, it has a hidden public endpoint.
    - To enable cross-cluster service discovery, the remote secret must be created with the hidden public IP.
  - Pod CIDRs must be added to the master authorized networks for cross-cluster service discovery to work.
  - There is a bug where cluster-wide mTLS options are not followed; a namespace-specific PeerAuthentication policy must be created.
  - Installation
    - ASM can be installed with three methods:
      - Manifests
      - Asmcli
      - Terraform
- Deployments
  - Namespaces
    - Namespaces should include the annotation `mesh.cloud.google.com/proxy: '{"managed":"true"}'`.
  - Cert Issuer
    - cert-manager requires leader-election roles that are normally assigned to the `kube-system` namespace. Because Autopilot completely locks down `kube-system`, these resources must be patched to deploy to the `cert-manager` namespace.
    - If deploying with ACM from a repo, `google-cas-issuer` contains a CRD with a status field that isn't supported in ACM; this field can be removed and functionality is the same.
    - You need to wait between deploying cert-manager, `google-cas-issuer`, and the cluster/namespace issuers; deploying them back to back may force a restart of the deployments.
    - The google-cas-issuer KSA must be annotated with `iam.gke.io/gcp-service-account` so that the KSA has permission to request certificates from the Google private certificate authority.
  - Istio ingress gateway
    - The Istio ingress gateway can be deployed with:
      - Manifests generated by `istioctl` from an `IstioOperator`
      - Static YAML files
    - The gateway namespace must include the `mesh.cloud.google.com/proxy` annotation.
    - GKE Autopilot ASM and Ingress Gateway Manifests
      - Service
        - Must include the annotations `networking.gke.io/internal-load-balancer-allow-global-access: "true"` and `networking.gke.io/load-balancer-type: Internal`.
        - Should be of type `LoadBalancer`.
        - `loadBalancerIP` should be set to a previously created internal IP; otherwise one will be created for you.
      - Gateway
        - Hosts in a `Gateway` should be in the format [namespace]/[host name].
      - VirtualService
        - `gateways` entries should be in the format [gateway namespace]/[gateway name].
  - Tekton
    - Autopilot has certain default resource request allocations and hard-coded limits for CPU, memory, and ephemeral storage.
      - Due to the nature of Tekton, it is not possible to modify resource requests for its init containers, which causes some containers to exceed the 10GiB ephemeral storage limit.
      - A workaround is to not use Tekton's built-in resource methods for handling images and repositories, and to perform those steps manually with workspaces.
    - Because Autopilot scales dynamically, nodes are generally sized just large enough to fit the running workloads. When a new Tekton pipeline is created, there is a substantial delay while a new node bootstraps before the pipeline pods can be created.

this is a great post – one note is that while i try my best to keep whereami updated in my own github repo, the best place to grab it from is https://github.com/GoogleCloudPlatform/kubernetes-engine-samples/tree/main/whereami