At work, I am responsible for collecting PLC/HMI data logs from systems, then organizing and transforming the data so that relative system performance can be evaluated.

In the infancy of my “data science” journey, I used Microsoft Excel and its integrated Power Query program to fulfill my data analysis needs. This method had a very shallow learning curve and was for the most part graphical. I ended up using this method for the first few projects. It wasn’t until I took on more complex projects with larger data sets that I hit Excel’s row limit as well as the computational bottleneck of Power Query.

For my most recent project I used Power Query in Excel up until around 700,000 rows. With Excel’s 1,048,576 row limit fast approaching, I began searching for a solution that could handle the vast amounts of data that would accumulate, as I was only about 3 months into a multi-year project.

I then stumbled upon Microsoft Power BI, which is usually used for business intelligence and revenue-based data, and decided to give it a shot.

Power BI was an impressively powerful and robust tool for visualizing massive quantities of data, and it has various data source integrations. However, its core data transformation tool is Power Query, which was too limited in functionality and vastly inefficient when large quantities of data are involved. My 700k row data file took roughly 20-30 minutes to finish transforming, which would not be acceptable once more data started to accumulate.

I looked around and ended up finding some references to people using Python and Pandas for big data. There was a steeper learning curve than with Power Query since there was no GUI available and all the transformations needed to be coded, but the end result was a lightning fast and robust data cleaning and transformation tool that could handle recursive calculations with ease (something Power Query struggled with and that was necessary in my application). However, pandas alone did not have all the functionality I needed, so I also had to install numpy and import glob (which ships with Python’s standard library).

Below is the full code to my cleaning function.

First, in lines 27 through 30, I used os.path to retrieve my absolute path so that the Python module can be run from any location, as long as the folder structure containing the raw CSV data is correct. I had some issues with “\” registering in Python, so I had to replace it with “/” (when referencing the character “\” in a string you must put another backslash in front of it: “\\”). I then assigned variables to the three paths where my CSV files were located, ending each path with a “*” wildcard so that all CSV files in the path are referenced.

Lines 37 to 39 assigned variables and globbed those CSV files with glob.glob.
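A minimal sketch of how the path setup and globbing could look. The folder names (`raw`, `Analog`, `Backwash`, `OperationalMode`) and the `*.csv` pattern are hypothetical placeholders, not the names used in the original module. Building paths with `os.path.join` is one way to avoid the backslash replacement altogether.

```python
import glob
import os

# Folder containing this script; fall back to the working directory when
# __file__ is not defined (for example in an interactive session).
try:
    base_dir = os.path.dirname(os.path.abspath(__file__))
except NameError:
    base_dir = os.getcwd()

# Hypothetical folder names, one per log category.
categories = ["Analog", "Backwash", "OperationalMode"]

# os.path.join inserts the correct separator for the operating system,
# so no manual "\\" to "/" replacement is needed.
patterns = {
    name: os.path.join(base_dir, "raw", name, "*.csv") for name in categories
}

# glob.glob returns the list of files matching each wildcard pattern
# (an empty list when nothing matches).
files = {name: sorted(glob.glob(pattern)) for name, pattern in patterns.items()}

for name, paths in files.items():
    print(f"{name}: {len(paths)} CSV file(s) found")
```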

In lines 41 to 62, I appended each category’s CSVs into a single array and saved them into three respective pandas data frames.
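As a rough illustration of this step, the sketch below reads every CSV in a folder and combines them into one data frame. It writes two tiny sample files to a temporary folder so it can run on its own; the file and column names are made up for the example.

```python
import glob
import os
import tempfile

import pandas as pd

# Create two small sample CSV files so the sketch is runnable.
# The file names and columns are hypothetical.
tmp_dir = tempfile.mkdtemp()
pd.DataFrame({"Time": ["2020-01-01 00:00:00"], "Flow": [1.0]}).to_csv(
    os.path.join(tmp_dir, "analog_1.csv"), index=False
)
pd.DataFrame({"Time": ["2020-01-01 00:01:00"], "Flow": [2.0]}).to_csv(
    os.path.join(tmp_dir, "analog_2.csv"), index=False
)

csv_files = sorted(glob.glob(os.path.join(tmp_dir, "*.csv")))

# Read each file into its own data frame, then concatenate them once.
frames = [pd.read_csv(path) for path in csv_files]
analog = pd.concat(frames, ignore_index=True)

print(analog)
```

Collecting the frames in a list and calling `pd.concat` once is generally faster than growing a data frame inside the loop.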

Lines 64 to 70 converted the time stamp strings into pandas timestamps and set each data frame’s index to the converted time stamp.
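A short sketch of the conversion, assuming a hypothetical `Time` column that stores month/day/year strings. The actual column name and format in the logs may differ.

```python
import pandas as pd

# Hypothetical sample: time stamps stored as plain strings.
df = pd.DataFrame(
    {"Time": ["01/02/2020 13:45:00", "01/02/2020 13:46:00"], "Flow": [1.0, 2.0]}
)

# Parse the strings into pandas timestamps. Passing an explicit format
# avoids ambiguity between month-first and day-first dates.
df["Time"] = pd.to_datetime(df["Time"], format="%m/%d/%Y %H:%M:%S")

# Use the parsed time stamp as the index.
df = df.set_index("Time")

print(df.index)
```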

Lines 72 to 75 merged all three data frames together using an outer merge and filled down so that no cells had missing data. This was necessary because the Analog data set logged consistently at 1 minute intervals, Backwash logged every 1 second during a value change, and Operational Mode logged whenever a value changed. As a result, each data set had several index values that were unique to it.
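The idea can be sketched with three small frames that log at different times. The column names and values here are invented for illustration.

```python
import pandas as pd

# Three hypothetical logs whose time stamps do not line up.
analog = pd.DataFrame(
    {"Flow": [10.0, 11.0]},
    index=pd.to_datetime(["2020-01-01 00:00:00", "2020-01-01 00:01:00"]),
)
backwash = pd.DataFrame(
    {"BackwashStep": [1, 2]},
    index=pd.to_datetime(["2020-01-01 00:00:30", "2020-01-01 00:00:31"]),
)
mode = pd.DataFrame(
    {"Mode": [3]},
    index=pd.to_datetime(["2020-01-01 00:00:10"]),
)

# An outer merge on the index keeps every time stamp from every frame.
merged = analog.merge(backwash, how="outer", left_index=True, right_index=True)
merged = merged.merge(mode, how="outer", left_index=True, right_index=True)

# Sort by time, then carry the last known value forward into the gaps.
merged = merged.sort_index().ffill()

print(merged)
```

Note that a forward fill cannot fill rows that come before a column’s first reading, so the earliest rows may still contain missing values.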

Lines 70 to 134 created additional columns that describe what step the unit was in, based on an integer value.
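One possible way to turn an integer code into a descriptive column is a dictionary lookup with `Series.map`. The codes and step names below are hypothetical, and the original code may have used a different approach.

```python
import pandas as pd

# Hypothetical mapping from integer step code to a readable description.
STEP_NAMES = {0: "Idle", 1: "Filtration", 2: "Backwash"}

df = pd.DataFrame({"StepCode": [1, 1, 2, 0, 7]})

# Look up each code; codes missing from the mapping become "Unknown".
df["StepName"] = df["StepCode"].map(STEP_NAMES).fillna("Unknown")

print(df)
```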

Lines 136 to 183 removed and renamed columns. You can reference the columns either by their numeric index value or by their name.
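A brief sketch showing both ways of referencing columns, using invented tag names:

```python
import pandas as pd

# Hypothetical raw column names as they might come out of a logger.
df = pd.DataFrame(
    {"Tag_001": [1.0], "Tag_002": [2.0], "Spare": [0], "Unused": [0]}
)

# Drop a column by name.
df = df.drop(columns=["Spare"])

# Drop a column by position: after the first drop, position 2 is "Unused".
df = df.drop(columns=df.columns[[2]])

# Rename the remaining columns to something readable.
df = df.rename(columns={"Tag_001": "Feed Flow", "Tag_002": "Feed Pressure"})

print(list(df.columns))
```

Referencing by name tends to be safer, since positions shift whenever a column is added or removed upstream.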

Lines 184 and 185 created a column that represented the recursive difference between the current and next time stamp, and converted the value to minutes so that duration and volume based calculations could take place.
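A minimal sketch of the row-to-row duration, assuming a time stamp index and a hypothetical `Flow` column expressed per minute:

```python
import pandas as pd

df = pd.DataFrame(
    {"Flow": [10.0, 12.0, 11.0]},
    index=pd.to_datetime(
        ["2020-01-01 00:00:00", "2020-01-01 00:01:00", "2020-01-01 00:01:30"]
    ),
)

# Turn the index into a column-like Series so it can be shifted.
timestamps = df.index.to_series()

# Next time stamp minus current time stamp, expressed in minutes.
# The last row has no "next" row, so its duration is missing (NaN).
df["DurationMin"] = (timestamps.shift(-1) - timestamps).dt.total_seconds() / 60

# Example of a volume calculation: flow rate multiplied by duration.
df["Volume"] = df["Flow"] * df["DurationMin"]

print(df)
```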

Lines 187 to 235 created conditional calculation columns that only have values during certain scenarios, for ease of data use in Power BI.
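Conditional columns like these can be sketched with `numpy.where`, which keeps a value where a condition holds and leaves the cell empty otherwise. The column names are hypothetical and the original conditions are not shown in the text.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(
    {
        "StepName": ["Filtration", "Backwash", "Filtration"],
        "Volume": [10.0, 4.0, 6.0],
    }
)

# Keep the volume only on rows where the unit is filtering.
df["FiltrationVolume"] = np.where(
    df["StepName"] == "Filtration", df["Volume"], np.nan
)

# Keep the volume only on rows where the unit is backwashing.
df["BackwashVolume"] = np.where(
    df["StepName"] == "Backwash", df["Volume"], np.nan
)

print(df)
```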

Lines 237 to 243 were added in a later revision. These lines of code imported a CSV file containing date times to which I had assigned “Test Numbers”, in order to provide an indexable value that correlates to a testing schedule I would import into Power BI. I began by importing the CSV to a data frame, then converted the time stamp to a pandas timestamp and set that as the index. I then used pd.merge_asof to merge the two data frames, with the direction argument set to backward, and filled down so that each row had an indexable “Test Number”.
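The backward as-of merge can be sketched as follows. The column names and dates are invented; both frames must be sorted by their time stamp index for `pd.merge_asof` to work.

```python
import pandas as pd

# Hypothetical operating data indexed by time stamp.
data = pd.DataFrame(
    {"Flow": [1.0, 2.0, 3.0, 4.0]},
    index=pd.to_datetime(
        ["2020-01-01 00:00", "2020-01-01 12:00", "2020-01-02 06:00", "2020-01-03 00:00"]
    ),
)

# Hypothetical schedule: the time each test started and its number.
tests = pd.DataFrame(
    {"Start": ["2020-01-01 06:00", "2020-01-02 00:00"], "Test Number": [1, 2]}
)
tests["Start"] = pd.to_datetime(tests["Start"])
tests = tests.set_index("Start")

# For each data row, take the most recent test that started at or before it.
tagged = pd.merge_asof(
    data, tests, left_index=True, right_index=True, direction="backward"
)

print(tagged)
```

Rows logged before the first test start have no match and keep a missing “Test Number”.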

Lines 245 to 247 printed to the console that the module was saving the resulting data set in CSV format, exported it via the df.to_csv command, and printed the number of rows exported.
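A small sketch of the export step. The output path is a temporary placeholder rather than the real destination.

```python
import os
import tempfile

import pandas as pd

df = pd.DataFrame(
    {"Flow": [1.0, 2.0]},
    index=pd.to_datetime(["2020-01-01 00:00:00", "2020-01-01 00:01:00"]),
)
df.index.name = "Time"

# Hypothetical output location; a temporary folder keeps the sketch runnable.
out_path = os.path.join(tempfile.mkdtemp(), "cleaned.csv")

print("Saving cleaned data to", out_path)
df.to_csv(out_path)  # the time stamp index is written as the first column
print(f"Exported {len(df)} rows")
```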

With that, the Python/Pandas portion of the data transformation was finished.

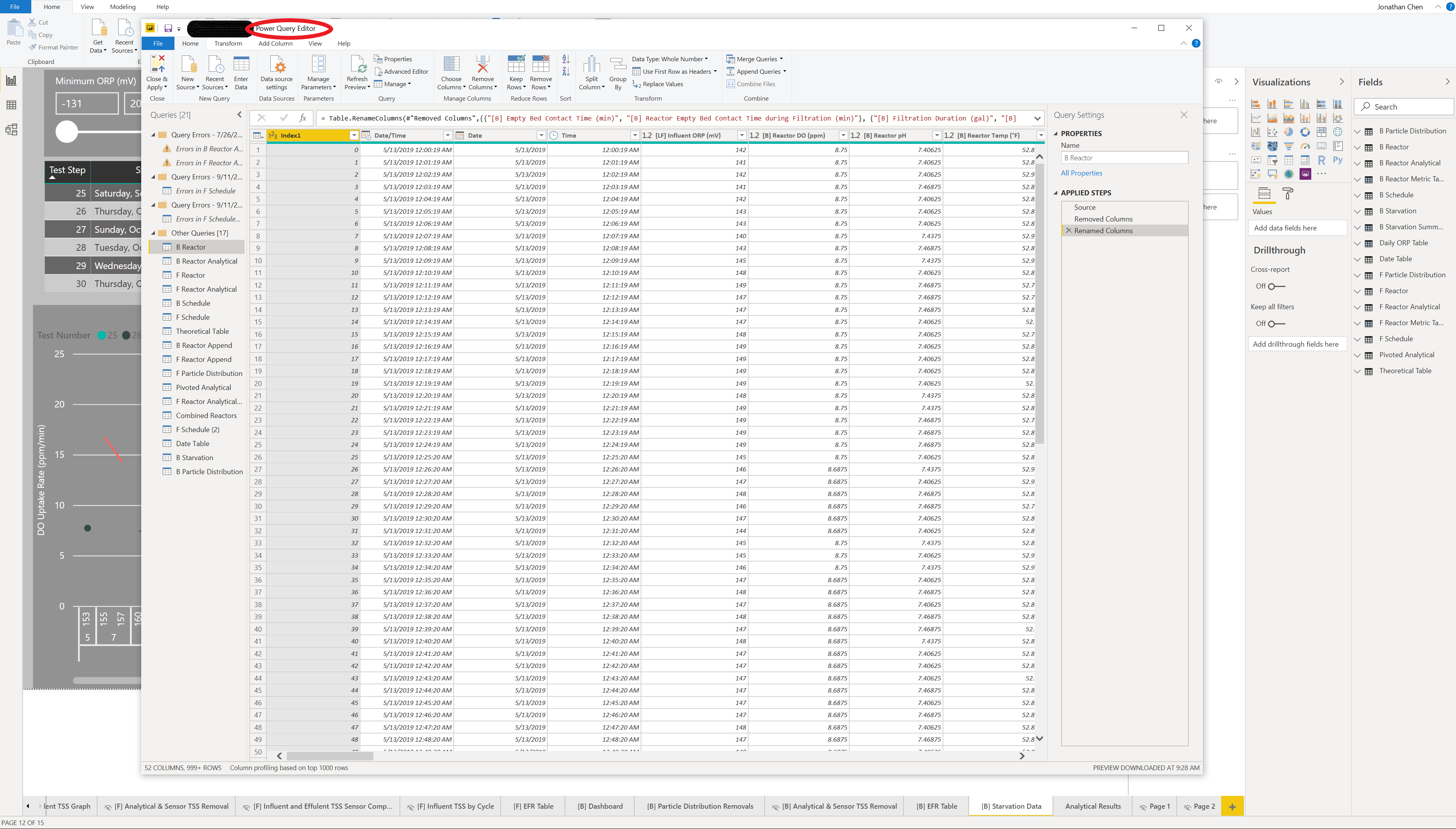

The next step was importing the “Cleaned CSV” into Power BI and beginning the final steps of data cleaning in Power Query.

The “Cleaned CSV” actually consisted of two separate units that were logged into one file. In Power Query I made 2 new referenced queries that separated the data for these two units, maintaining their time stamps, which I had to split into: Date/Time, Date, and Time. This was done because Power BI’s date hierarchies cannot include times, and to prevent conflicts when relating data sets that only contain date stamps.

From there I imported a number of associated files such as: Testing Schedule, Analytical Laboratory Data, and a Date Table. This data was then cleaned, separated and formatted for each of the two units. I also created a pivoted version of the analytical data so that a dynamic series could be used in one of my visuals.

Doing this allowed a specific attribute to be selected and displayed on a graph, rather than manually dragging and dropping the attribute from the data set every time a new attribute needed to be plotted.

The next step of the data cleaning and transformation took place in the DAX environment of Power BI, after the data import from Power Query was done. I needed to number the “cycles” that our unit had been through based on certain conditions. For example, a cycle for your car’s gas usage would be driving X miles and then filling up the gas tank. You can then divide the number of miles you drove by the number of gallons you put in to determine gas mileage, provided that you fill the tank completely every time. To achieve this, I had already created a column that displayed the state the unit was in on its next row. Thus all I needed to do was create a logical function that compared the current row to the column shifted by +1 to determine whether it was a “new cycle”. The hardest part was numbering each of these cycles incrementally and applying that number to every row in the “cycle”. After several hours of googling I found the perfect function to get the job done: the magical RANKX DAX function paired with a FILTER function.
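For readers who think in pandas, the same cycle-numbering logic can be sketched there. This is only an analogue for illustration, not the RANKX and FILTER formula used in Power BI, and the state names are hypothetical.

```python
import pandas as pd

df = pd.DataFrame(
    {"State": ["Run", "Run", "Backwash", "Run", "Run", "Backwash", "Run"]}
)

# State of the unit on the next row (the "shifted" column).
df["NextState"] = df["State"].shift(-1)

# Assumption: a cycle ends on the last backwash row before the unit
# returns to another state.
df["CycleEnd"] = (df["State"] == "Backwash") & (df["NextState"] != "Backwash")

# Count how many cycles ended before each row, then add 1 so that
# numbering starts at 1 and every row in a cycle shares its number.
df["Cycle"] = df["CycleEnd"].shift(1, fill_value=False).cumsum() + 1

print(df[["State", "Cycle"]])
```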

I then used this to create a separate table that would summarize all the data for each “cycle”, with the SUMMARIZECOLUMNS DAX function. This allowed me to calculate metrics by cycle rather than by date or time.
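As a rough pandas analogue of a per-cycle summary table (again an illustration only, not the DAX used in the report), a `groupby` over a hypothetical `Cycle` column produces one row per cycle:

```python
import pandas as pd

# Hypothetical row-level data already tagged with a cycle number.
df = pd.DataFrame(
    {
        "Cycle": [1, 1, 1, 2, 2, 2],
        "Volume": [10.0, 12.0, 0.0, 8.0, 9.0, 0.0],
        "DurationMin": [1.0, 1.0, 0.5, 1.0, 1.0, 0.5],
    }
)

# One row per cycle with totals for each metric.
summary = (
    df.groupby("Cycle")
    .agg(TotalVolume=("Volume", "sum"), TotalMinutes=("DurationMin", "sum"))
    .reset_index()
)

# A per-cycle metric, similar to miles per gallon in the car example.
summary["VolumePerMinute"] = summary["TotalVolume"] / summary["TotalMinutes"]

print(summary)
```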

The final step of the data transformation and cleaning was defining relationships between the 16 individual data sets I had, using Power BI’s relationship tool. This part was quite frustrating because of many discontinuity errors, but I found a solution that involved the “Test Number” I added in the Pandas transform and a Date Table.

Now I have over 2 million rows of primary operation data that can be directly related to test plans, analytical data, metrics, and any other data sets I decide to add in the future.